The Center for Long-Term Cybersecurity has produced an animated “explainer” video about differential privacy, a promising new approach to privacy-preserving data analysis that allows researchers to unearth the patterns within a data set — and derive observations about a population as a whole — while obscuring the information about each individual’s records.

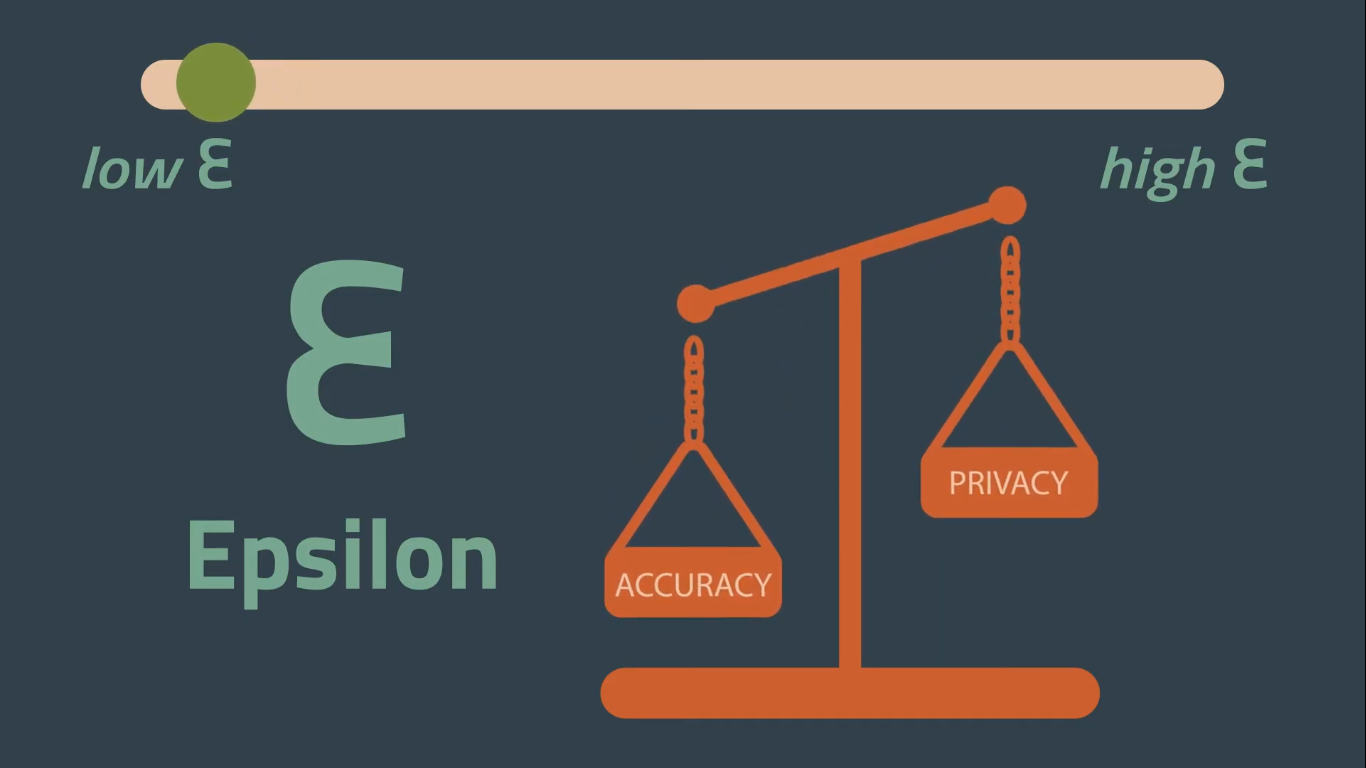

As explained in more detail in a post on the CLTC Bulletin — and on Brookings TechStream — differential privacy works by adding a pre-determined amount of randomness, or “noise,” into a computation performed on a data set. The amount of privacy loss associated with the release of data from a data set is defined mathematically by a Greek symbol ε, or epsilon: The lower the value of epsilon, the more each individual’s privacy is protected. The higher the epsilon, the more accurate the data analysis — but the less privacy is preserved.

Differential privacy has already gained widespread adoption by governments, firms, and researchers. It is already being used for “disclosure avoidance” by the U.S. census, for example, and Apple uses differential privacy to analyze user data ranging from emoji suggestions to Safari crashes. Google has even released an open-source version of a differential privacy library used in many of the company’s core products.