A CLTC panel focused on shifting the paradigm around data and privacy.

Emerging technologies call for new policies and business models that increase the value of the data they generate while also preserving privacy and security. Internet of things (IoT) devices, digital assistants, ubiquitous sensors, and augmented/virtual reality (AR/VR) environments need regulation and markets at the same time. How do we manage tradeoffs and risk tolerance? How can academic research and policy interventions contribute to optimal outcomes in this space?

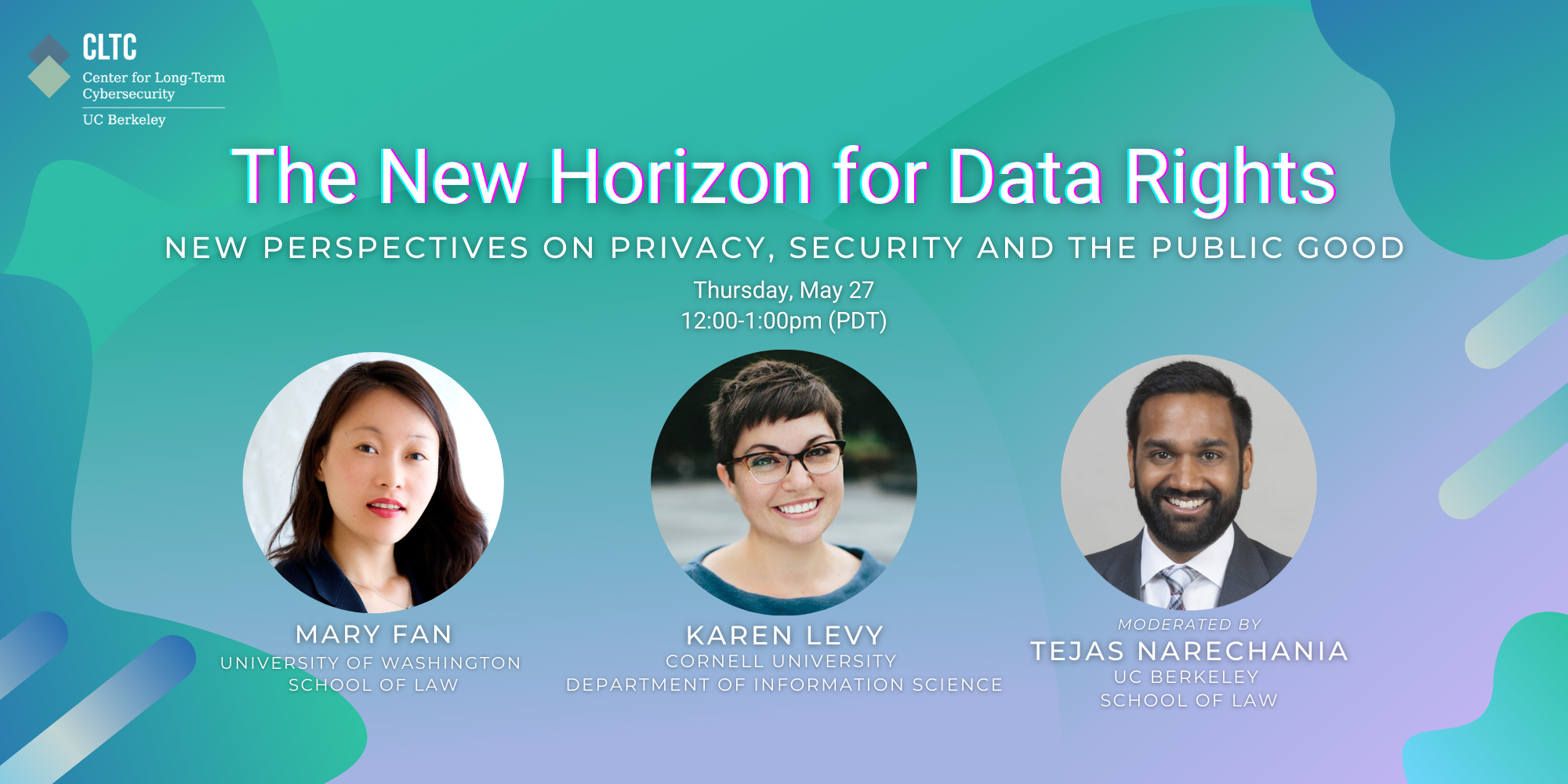

On May 27, 2021, CLTC presented an online conversation featuring two scholars — Mary Fan, Henry M. Jackson Professor of Law at the University of Washington, and Karen Levy, Assistant Professor in the Department of Information Science at Cornell University — who are at the forefront of thinking about these issues. The conversation was moderated by Tejas Narechania, Robert and Nanci Corson Assistant Professor of Law at the UC Berkeley School of Law and Faculty Director of the Berkeley Center for Law & Technology. The panel was introduced by Richmond Wong, a Postdoctoral Fellow at CLTC.

Fan has a paper forthcoming in the NYU Law Review that “frames a right of the public to benefit from our privately held consumer big data,” she explained. “We contribute data to a massive growing pool every time we use the internet…. The information could be powerfully deployed, not just for private profit, but also public benefit, such as predicting and preventing disease, or understanding the spread of information, if that data is accessible for use. My piece draws on insights from property theory, regulatory advances, and open innovation, and introduces a model that permits controlled access and use of our pool of consumer big data for public-interest purposes, while protecting against privacy and related harms.”

Levy studies the legal, organizational, social, and ethical aspects of data-intensive technologies, particularly as they affect work and workers; she is writing a book analyzing the emergence of electronic monitoring in the long-haul trucking industry. “I’m a sociologist by training, and so I get really excited about the messiness of social life and how it intersects with the legal rights and privacy and other rights that relate to technology use, and how those intersect or are complicated by the way people relate to one another, the way people hold power over one another,” she explained. “All of my work follows that thread and tries to pull on different ways in which that messiness complicates our rights and privacy.”

Following are some of the questions and responses from the discussion. Please note that responses have been edited for length and content. Watch the video above to see the full presentation.

Tejas: A lot of us tend to think about privacy and data from a largely individual perspective, especially in the US, but your work broadens that perspective, beyond individual control to data in society as an artifact of social relationships. Why do you think it’s important to focus on these externalities of social relationships, and what are some of the key insights that we might get from doing that?

Levy: You’re right that there has been a lot of legal theory work about how we tend to see privacy as an individualistic right. But in fact, there are many societal values to privacy. We all do better in a society that has privacy. Privacy supports all kinds of really important modes of human development and establishing democracy. We should all care about each other’s privacy from the baseline because it helps to establish a more healthy society, even if we’re not directly implicated. So it’s a common concern.

In the law, the notice-and-consent model is the dominant mode of regulating privacy in the United States, and that model has been critiqued for lots of different reasons. One critique is that it posits this individual “end user,” but in fact, we know there is no end user. The end user is a network of different related people, the people who make decisions about what data are transmitted, and they stand in different power relationships to the people around them.

There is interesting work in information science about smart home devices, for example if you have a webcam or a smart doorbell, and what the privacy settings are going to be. Those technologies implicate a lot of people other than the homeowner, but it tends to be the more powerful person or the person with financial control who gets to make those choices. So if we think about kind of where the harms and benefits may accrue, there are much broader dynamics at play. And that’s not really a good fit for how we’ve traditionally thought about the way the law functions.

Fan: The privacy protection framework is intuitively, “I want more control over my personal information.” And that’s the predominant lens of regulatory advances and proposals that we see today. But what my work is trying to argue is that control over our individual data misses the larger overarching question about who gets to use and benefit from the valuable amassed information about us, which is currently often held and protected under copyright law and trade secret law by private companies. Each individual data point is just a molecule in the sea of what is valuable, powerful, as well as potentially dangerous and beneficial about the pooled data points. It’s our individual single data point that we intuitively care very deeply about, because it’s ours.

My work currently looks at what the individual privacy paradigm misses, and how you can get unintended adverse consequences when you have this predominant individual privacy lens, and you miss the ocean of value in terms of the collective interest in this pooled data. These are negative consequences of the prevailing model of data control, the individual control model.

Levy: Last year I co-wrote a paper in the Washington Law Review on privacy dependencies, the idea that people’s privacy depends on the decisions and disclosures that other people make, which is somewhat intuitive. What we do and say can reveal a lot about other people, even when what we are sharing is only about us. If I post a picture of my daughter to Instagram, that’s me passing my daughter’s data. If I submit my genetic material to a genealogy website, that implicates the privacy of all my relatives. We see these things popping up all over the place.

In other situations, it’s more subtle, because these dependencies can be based on our similarities or differences from people who we actually don’t know at all. There’s social science research showing that we can infer a lot about people, even when they take steps to protect sensitive data. We can infer with a high degree of accuracy what someone’s sexual identity is based on the sexual identities of their friends who have revealed that information. Or we can generalize what I’m likely to buy based on what other people of my age and socio-economic group are likely to buy. These are somewhat intuitive, but we under-appreciate it when we think about privacy, because oftentimes that provides this route around thinking about consent. You no longer need my consent if you can gather information about me with a high degree of accuracy anyway.

It raises important open questions: if we accept that this is everywhere, how do we deal with it? How do we want to regulate differently if we acknowledge that the individualistic model doesn’t take us where we need to go? To the question of externalities, there’s a lot of inspiration to be taken from environmental law, from areas like joint property and other contexts in which we think about this diffusion of harms across multiple parties.

Tejas: Mary, your paper talks about how sensitive data can be used for big public-interest purposes, like epidemiological studies, and that there are ways we can draw from existing protocols without trampling on individual privacy. Maybe you could say a little bit more about what you think some of those goods are, and what are the potential dangers of existing privacy laws and structures.

Fan: There are a lot of important questions that we need access to privately controlled data to answer. For example, how do data information flows influence the rise of violent extremism and domestic terrorism? How do internet bots and trolls spread viral patterns of misinformation, and potentially even influence vital decisions, such as presidential elections or COVID-19 vaccine refusal? How might our data be used to filter advertisements or even the prices that we get for our goods? The question is, how do we partake in the benefits of this data? How do we as consumers share its benefits?

Big brother doesn’t have all the information, or even the means of expertise, of harnessing that information the way private actors do today. In fields like law, epidemiology, and misinformation studies, there are questions we’d want to answer if we had access to the data. But the problem is, we’re seeing these well-intentioned laws strongly shaped by an individual privacy focus. That’s overlooking and, potentially worse, impeding public interest uses of this pool of personal data.

I use as a prime example the European Union’s General Data Protection Regulation (GDPR), which has as its primary paradigm the commercial use of consumer data, but is also sending alarm waves about impeding life-saving biomedical and health research. Private companies like Facebook may want to share their data with social scientists. But the threat of multi-million dollar sanctions is a major deterrent to sharing important information.

When that information is shared (for example, Facebook recently released one of the largest social science databases ever assembled), researchers were super excited about accessing this data. But to comply with regulations that don’t adequately distinguish between public-interest uses versus private profit-making, the data is obscured using differential privacy techniques, introduction of noise and censoring techniques that basically render the ability to draw research conclusions difficult, and potentially impossible when it comes to smaller group sizes. Fundamentally, there needs to be a distinction and an understanding that this data has a public resource nature to it.
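The point about smaller group sizes can be illustrated with a minimal sketch of one common differential privacy technique, the Laplace mechanism applied to a count. This is an illustration under stated assumptions, not the method applied to the dataset Fan describes: the function names, the privacy parameter `epsilon = 0.1`, and the group sizes are all hypothetical choices made for the example.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent Exp(1) draws follows a
    # Laplace(0, 1) distribution; multiplying by `scale` rescales it.
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def noisy_count(true_count, epsilon, rng):
    # A counting query changes by at most 1 when one person is added or
    # removed (sensitivity 1), so the Laplace scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


def mean_relative_error(true_count, epsilon, trials=10_000, seed=0):
    # Average absolute error of the noisy count, as a fraction of the
    # true count, over many simulated releases.
    rng = random.Random(seed)
    total_abs_error = 0.0
    for _ in range(trials):
        total_abs_error += abs(noisy_count(true_count, epsilon, rng) - true_count)
    return total_abs_error / trials / true_count


if __name__ == "__main__":
    # Hypothetical group sizes; epsilon = 0.1 is an assumed privacy budget.
    for group_size in (10, 100, 10_000):
        error = mean_relative_error(group_size, epsilon=0.1)
        print(f"group of {group_size}: mean relative error ~ {error:.3f}")
```

Because the noise scale depends only on `epsilon` and not on the size of the group, the same amount of noise that is negligible for a group of ten thousand can be as large as the true value for a group of ten, which is one way to see why conclusions about small groups become hard to draw.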

Tejas: Let’s turn from theory to policy. If you had President Biden’s desk for a minute and you could draft an executive order and have him sign it, or you could get Congress to pass something, what would it be? What’s the next step for policymakers?

Fan: I’d love to see recognition that this pool of consumer data that we generate in the digital economy has a public resource nature to it, and the public should enjoy rights of what I’ve called “controlled access and benefit.” It’s not a free-for-all, but it’s controlled access and benefit from this public resource.

Levy: The most important point for policymakers is to recognize how intertwined our fates are with privacy and to better understand the impact of regulation in this area on social solidarity and on trust. The wrong approach would be to say, we know that our privacy is all dependent on each other’s choices, and therefore, we’re just going to double down on notice and consent and say, when you sign a privacy policy, you need to think about others whose data may be implicated. That would be the wrong decision, knowing what we know about how difficult it is for people to regulate their own privacy choices, when they’re only charged with thinking about themselves.

We should think about where the burdens are going to lie with this kind of decision-making and whether that’s the appropriate balance. In breaking out of this idea of the “end user,” we might take inspiration from research ethics. If I do a study and I go to my institutional review board (IRB) and I say, I’m going to interview these 20 people, the common rule is that, in addition to my research participants, there are secondary participants. If I’m asking questions about someone’s dad, that dad — whom I didn’t directly interact with — is still somebody my IRB is supposed to take into account when deciding if I can do this study. That feels like a model for approaching some of these dependencies problems that doesn’t place all the onus on this party stuck in the middle.

Tejas: In reading your papers, I was thinking of Albert Hirschman’s book, Exit, Voice, and Loyalty. I was thinking about, how much control do I have over my ability to enter into certain relationships? It’s the idea that the dependencies among privacy mean that sometimes I have no control over my ability to even enter into the relationship to begin with. But it also means that I have less control over my ability to exit from the relationship. And it also means that my voice in the management and control of that relationship is greatly diminished. So what are we learning, and what is old that is reflected here?

Levy: I love Hirschman and I love that you bring that up. I completely agree that this stuff is not actually all that new. It’s like old wine in new bottles. Social life has been messy for a long time. People have kept secrets for a long time. The scale and the nature of the information being shared is obviously different. You could say that’s a qualitative shift from where things were 20 or 25 years ago, and that matters. But I’m far from the first person to make this point.

It underscores the fact that tech policy is not really tech policy. Tech policy is family policy and economic policy and workplace policy. It’s a route into these bigger, broader and ultimately, intractable questions. Which is not to say there’s no progress to be made here. But my general response to anyone who tries to say that any tech policy question is easy, or has an obvious answer, is that they’re fooling themselves or that they’re trying to fool you. These are always going to be messy balancing acts.

The positive thing to take away is that we should take into account in policymaking that preferences are going to change over time. We’re just starting to reckon with the necessity of allowing for people’s privacy preferences to change in important ways, because they’re so deeply embedded in social life, which is so messy and complicated.

Fan: Human civilization is so long, and we’ve been grappling with the same deep, big questions. It just takes new configurations as our lives and our culture and technology change. But there are fundamental big questions that our minds tend to grapple with, and one of them is the individual versus the community. How do we protect and define the community? And how do we have community safety or shared interests? In America, we have this powerful culture of individualism, and technology accelerates that somewhat. It actually accelerates some of the tendencies that we’ve long observed in our culture. What are our shared collective interests? How do we define the collective interests? What is the collectivity? How is it manufactured? And what is the role of the individual versus the group?

Tejas: As a basic institutional design question, what do we want policymakers to do about this? And how do you design an institution that accounts for diffuse policies to come up with a shared definition of what’s good? What’s the institution that does this the best?

Fan: What you need is a process by which, even if you don’t agree with the ultimate outcome, you respect the process, you respect the representation on the boards that reach the process. And so we have models from various domains of law that grapple with controversial questions that set forth representation from a cross section of the affected community. In my particular case, where we’re talking about private data, that cross section should include public but also private representatives, because there are multiple interests and stakeholders and you need buy-in. So it’s about the reality and the perception of legitimacy, particularly in these contested areas, and representation matters.

Levy: The word “participatory” has become a watch-word of the way we should do things, that having the voices of affected communities at the table is the floor, right? But there are questions like, how do we ensure that’s not tokenistic? What does it mean to be representative? Who are we representing? And along what axes? How do we decide what counts? Those are really hard questions.

I’ll shout out the work of one of my PhD students, Fernando Delgado, who wrote a policy proposal for the Biden administration under the Day One project that is a community-centered agenda for AI, which is about how federal funding might be best put to use to foster those types of community-led projects. That seems like a good first step. It’s not going to solve all these problems of how you decide, but it is recognizing that maybe the way to answer these questions is through fostering community-led work, rather than the top-down model that we have for funding now, which is largely serving the agenda of market concentration.