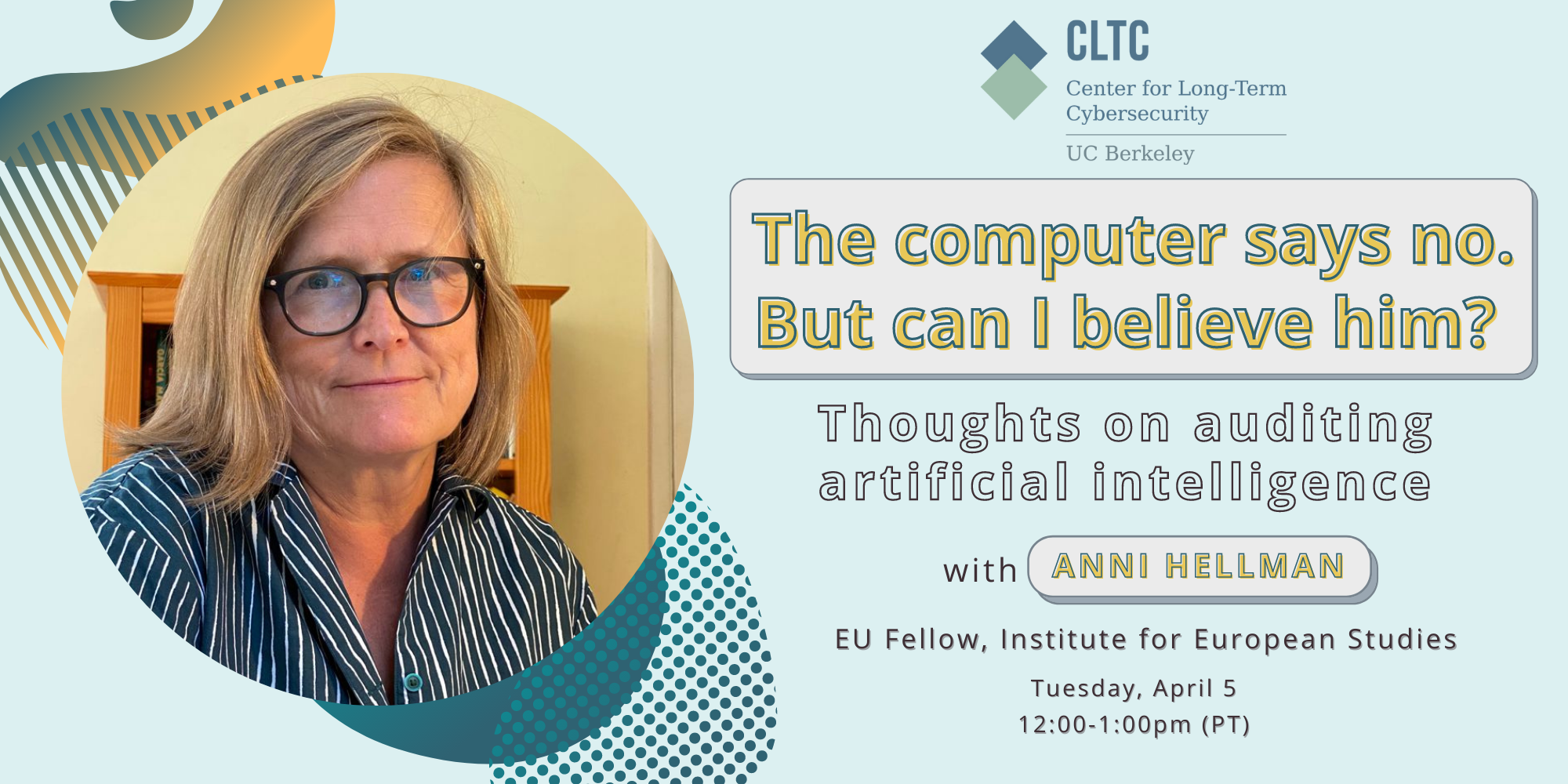

On April 5, 2022, CLTC hosted a presentation by Anni Hellman, a Deputy Head of Unit at the European Commission who is currently an EU Fellow at UC Berkeley. Hellman’s talk, which was co-sponsored by the Institute of European Studies, the Berkeley Institute for Data Science (BIDS), and the UC Berkeley Center for Human-Compatible Artificial Intelligence, focused on the role of audits in promoting fairness in artificial intelligence-based systems.

Hellman’s talk was inspired in part by the European Commission’s proposed Artificial Intelligence Act to the European Parliament and Council, which details requirements for AI-based software that would be used in legislative areas considered to be “high risk,” meaning they could “create adverse impact on people’s safety or their fundamental rights.” How can compliance with these requirements be verified, Hellman asked, and can that be done ex post, or is an ex ante audit necessary?

In her talk, Hellman — a mathematician, qualified insurance actuary, and a Fellow of the Actuarial Association of Finland — provided an overview of key questions related to how and whether the trustworthiness and fairness of artificial intelligence and algorithms can be verified.

“AI is of course already around us,” Hellman explained. “It is not the time to discuss whether we want to have AI in the world or not. It is there…. It’s created to solve problems where human work is too lengthy or laborious or takes too much time. But we have to remember that AI is also basically created to make profits for its owners. It uses masses of data from people who are not aware that their data is actually being used. It’s used to micro-target online citizens for good and bad purposes. And what AI does is classify subjects and make personalized decisions. But we don’t really have a handle on to why and how it does this. This is the ethical problem of AI.”

Hellman noted that the lack of transparency in AI systems often adds to the complexity of determining their fairness. “What I have been looking at is audit as a tool to verify whether AI is ethical, or whether it’s doing what it shouldn’t be,” she explained. “An audit is defined as a standardized process that aims at identifying risks and vulnerabilities and verifying that there are sufficient internal controls to mitigate those risks, and keep those risks at an acceptable level.”

Many AI systems rely on “black box” machine-learning algorithms, Hellman noted, and thus can be difficult to untangle. “The first aim of the audit is to understand what the AI wants to do,” Hellman said. “Then it has to sample and test and interview people subjected to the AI to understand how they perceive it.”

An audit of an artificial intelligence-based system also requires looking at the data used to train the system, Hellman said. “Does the AI treat minorities equally? And is it fair? And how about exceptions? Training data should be representative of the whole population, have sufficiently low level of errors, and have enough information for each individual,” Hellman said, noting that data must be up to date and as free of errors as possible.

An audit should also be able to “verify how the AI treats anomalies and exceptions” and “recommend human oversight,” Hellman said. “Ethics and fairness are not unambiguous. Correct data may not be enough to ensure ethical responses. And poor training data also brings problems…. Sometimes human oversight is needed. An exception may cause reputational risk for AI. So I think we need an ethical-by-design approach to AI development.”

Hellman noted that the European Commission’s proposal on AI was developed “to make sure that Europeans can trust the AI that is used in Europe,” but she added that such regulations will benefit from sufficiently robust auditing processes. “There has to be adequate risk assessment and mitigation systems in place,” she said. “There need to be appropriate human oversight measures in place to minimize the risks so humans can take over in risky situations, and there should be a high level of robustness, security, and accuracy.”

“The process for launch of an AI application in Europe in high-risk areas is quite demanding,” Hellman added. “There will be some kind of need for a third-party, ex ante audit. Somebody has to go through them and verify whether they are appropriate, considering the legislation. That also means that we need to have competent AI auditors with a set of qualifications. And there needs to be an AI authority or authorities who then approve the AI that launches in Europe.”

Watch the full talk (including questions and answers) above or on YouTube.