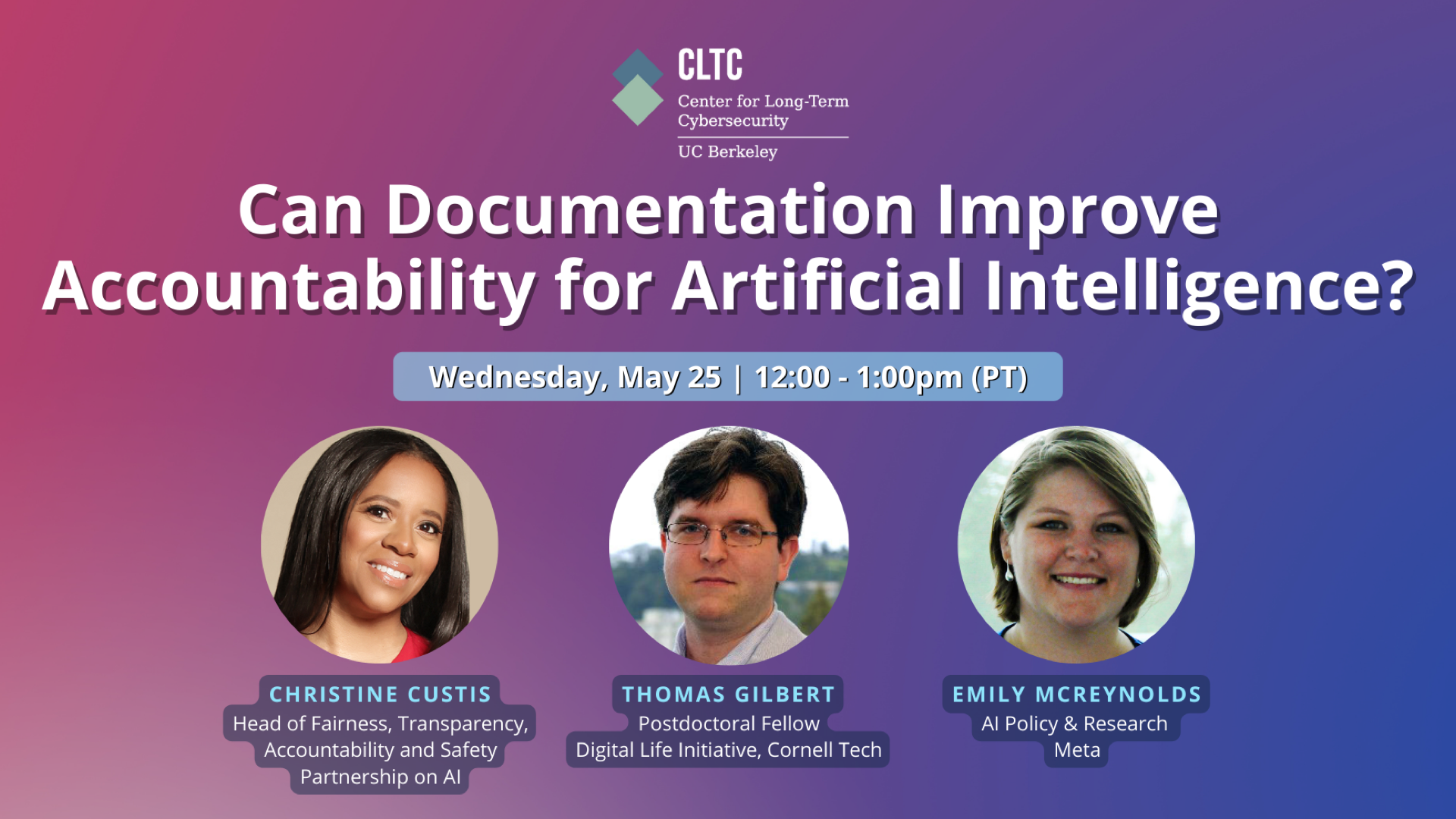

Diverse processes and practices have been developed in recent years for documenting artificial intelligence (AI), with a shared aim to improve transparency, safety, fairness, and accountability for the development and uses of AI systems. On May 25, 2022, CLTC’s AI Security Initiative presented an online panel discussion on the current state of AI documentation, how far the AI community has come in adopting these practices, and new ideas to support trustworthy AI well into the future.

Moderated by Jessica Newman, Director of the AI Security Initiative and Co-Director of the UC Berkeley AI Policy Hub, the panel featured Emily McReynolds, who is on the AI Policy and Research team for Meta AI; Thomas Gilbert, Postdoctoral Fellow at the Digital Life Initiative at Cornell Tech; and Christine Custis, Head of ABOUT ML & Fairness, Transparency, Accountability, and Safety at the Partnership on AI.

“Documentation can be a powerful tool to reduce the information asymmetries that are typical with AI deployments, and give potential users a better set of expectations about the characteristics, limitations, and risks of the system they’re interacting with,” Newman said in her introductory remarks. She noted, however, that “documentation of AI is still far from standardized or universal. Outstanding questions include at what points throughout the AI lifecycle to document, who the documentation is primarily for, and whether and how it does in fact promote sufficient transparency and accountability for the development and use of AI systems.”

Dr. Christine Custis introduced ABOUT ML, short for “Annotation and Benchmarking on Understanding and Transparency of Machine Learning Lifecycles,” an initiative of the Partnership on AI that aims to bring together a diverse range of perspectives to develop, test, and implement machine learning system documentation practices at scale.

“It’s a very simple approach to actioning responsible AI documentation throughout the entire machine learning development and deployment lifecycle, throughout the curation and collection of data, throughout the use and maintenance of the data, and throughout the development of the algorithms, models, testing, and evaluation,” Custis said. “There are all sorts of opportunities to keep a record of what you’re doing, and to be transparent about what you’re doing.”

Emily McReynolds, who leads Meta’s research on responsible AI with academia and civil society, discussed Meta’s AI and ML explainability documentation projects, including system cards and method cards. McReynolds described the “journey” that she and her team are undertaking to move toward transparency for a range of audiences.

“There’s a challenge around who you’re explaining what to,” McReynolds said. “One of the first things we did was hold a series of workshops. We had a ‘design jam’ to think about, what would be helpful for explaining the platforms and products that Meta has? One of the first things that came out of that was an approach to what we call ‘levels of explainability.’ It was thinking through, how do we address the different levels of explanation needed, everything from understanding that AI exists to explaining a decision.”

Tom Gilbert, a past CLTC grantee who previously received an interdisciplinary PhD in machine ethics and epistemology from UC Berkeley, discussed his recent work on developing “reward reports,” which he and other researchers detailed in a CLTC white paper. Reward reports focus on improving transparency in reinforcement learning systems in particular. “What you have with reinforcement learning is…an agent that is teaching itself how to navigate an environment that’s been specified by the designer. And often the agent, because it’s so smart, will learn things and experience that environment in ways that the designer doesn’t anticipate. And so that poses a major question for documentation.”

Unlike other documentation approaches, reward reports focus on “documenting what the system is trying to optimize for. In other words, what it’s trying to do, or what the reward is that it’s trying to maximize,” Gilbert said. Reward reports also aim to document how a system changes over time. “There needs to be a dynamic approach to documentation, rather than just a static one,” Gilbert said.

The panelists reiterated that documentation of AI is essential, but that the work will take time and collaboration. “It’s a process and a journey,” McReynolds said. “People might take that as an excuse, but there isn’t a destination. There is, how we figure this out, and how do we figure it out together?”

Watch the full video of the panel above or on YouTube.