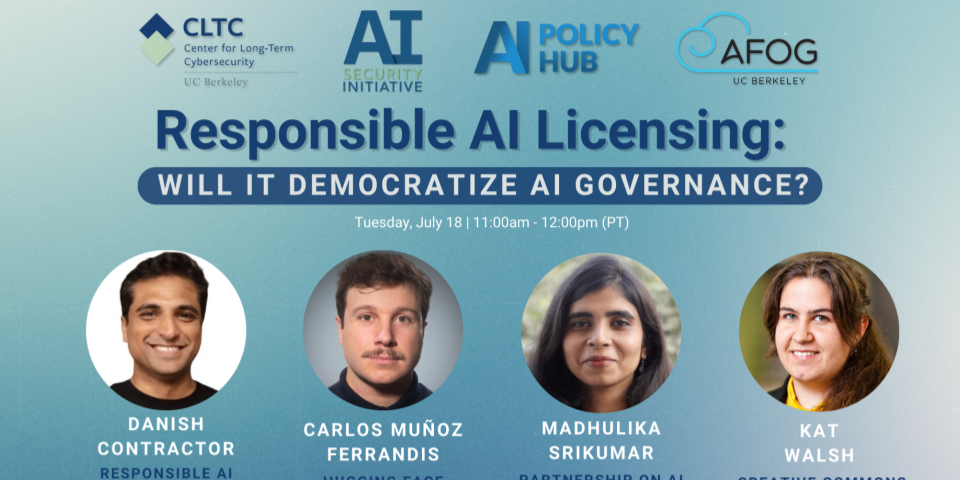

On July 18, 2023, the Center for Long-Term Cybersecurity (CLTC) and the Artificial Intelligence Security Initiative (AISI) hosted an online panel featuring members of the Responsible AI Licensing (RAIL) initiative, a volunteer collective dedicated to advancing licensing as a means of promoting responsible AI. The panel was co-sponsored by the AI Policy Hub and the Algorithmic Fairness and Opacity Group (AFOG).

As AI systems are shared and deployed in the wild, developers often have no say in how their models are used. RAIL licenses can help decentralize the definition of responsible use by allowing AI developers and other stakeholders in AI systems to include permissive- and restrictive-use clauses based on their own views of how the system(s) should be used or repurposed. Since its start in 2019, the initiative has made significant progress and gained traction among the developer community. The OpenRAIL License is now the second most-used license category on the Hugging Face Hub, after permissive open source software licenses.

Co-hosted by Hanlin Li, a postdoctoral scholar at CLTC, and Jessica Newman, Director of the AISI, the panel brought together active volunteers from the RAIL initiative, as well as experts in law and open source, to discuss the benefits, limitations, and opportunities of RAIL. The panelists discussed emerging challenges, as well as other responsible AI approaches that can complement RAIL. The panel also touched on opportunities to foster the adoption of RAIL.

“With the emergence of large AI models over the past few months, from ChatGPT to Midjourney, we have seen a lot of different approaches to responsible AI, which has prompted us to look at responsible AI licensing,” Li said in an introduction to the panel.

Danish Contractor, an AI researcher who currently chairs the Responsible AI Licensing Initiative, as well as the IEEE-SA P2840 Working Group on Responsible AI Licensing, explained that the concept for RAIL “emerges from the need to think about the use or limitations of the technology that you’re releasing, be it a model, be it source code, or be it a combination of both.”

He added that different versions of the RAIL license have been developed for machine learning models, source code, and combinations of both. The “OpenRAIL” version of the license permits free distribution with no commercial restrictions, as long as the use restrictions are followed. “When you’re releasing something, think about the use, put in usage restrictions or limitations that are indicative of what you know about the technology, and make sure that anybody who’s using that technology downstream has to comply with those terms,” Contractor said.

Carlos Muñoz Ferrandis, a lawyer and researcher, serves as Tech & Regulatory Affairs Counsel at Hugging Face, which he explained is an “open ML platform where everyone can upload and download machine learning models, but also training data sets.” He cited examples of existing RAIL licenses, including the BigScience OpenRAIL-M license, which can be applied to natural language processing models as well as other types of models, including multimodal generative models. The BigCode OpenRAIL-M license, meanwhile, aims at the responsible development and use of large language models (LLMs) for code generation.

Muñoz Ferrandis noted that other examples of RAIL-like licenses are emerging, including the license that Meta released alongside its Llama 2 model. “We are starting to see ML-specific licenses, not just traditional source code licenses, to license artifacts such as models, but also with some specific restrictions on uses of these new tools,” he said.

Kat Walsh, General Counsel at Creative Commons and a coauthor of version 4.0 of the CC licenses, said that “a lot of people have been asking us recently how [Creative Commons licenses] apply to AI and to materials that may be used to train AI, so we’re trying to figure out the best approach for licensing training data and other AI-related materials in service of an open commons and open and responsible sharing.”

Walsh noted that Creative Commons has not typically included provisions such as a “code of conduct” in its licenses. “We support alternative approaches, such as responsible use policies or regulations regarding things like privacy, but not in the licenses themselves,” Walsh said. “We think that copyright isn’t intended for that and doesn’t actually function that way…. The difficulty with licensing is that you want it to be interoperable with other materials, you want it to be a decision that won’t change. A lot of the ethical and usage standards should be more flexible, should be adapted to their communities, and should be able to change. So putting those usage restrictions in the licenses themselves would be pretty difficult.”

Madhulika Srikumar, Program and Research Lead for Safety Critical AI at the Partnership on AI, a global nonprofit shaping best practices to advance responsible AI, expressed optimism about the role of RAIL and other licenses in promoting responsible usage of AI. “RAIL can serve as a valuable tool for fostering community consensus, particularly within the research community, and regarding appropriate and inappropriate uses and limitations of AI models,” she said. “The licenses can provide a clear indication of the creator’s intentions, and can play a crucial role in shaping community norms over time.”

Yet at the same time, Srikumar said, licenses alone will not be enough to provide foundations for responsible AI usage. “Model licenses themselves are unlikely to be the primary means for establishing community consensus on responsible AI,” she said. “It can be very difficult for licenses alone to be the initial source of community consensus regarding societal impacts and misuses, or dual-use implications of AI. They are one component within a larger ecosystem of tools at our disposal.”