In 2024, UC Berkeley alum and cybersecurity expert Tim M. Mather ’81 provided support through the Cal Cybersecurity Research Fellowship for a team of students at the School of Information (I School) to enter the Artificial Intelligence Cyber Challenge (AIxCC), a national competition sponsored by the Defense Advanced Research Projects Agency (DARPA). For the project, the team developed an automated, AI-based code repair solution that combines vulnerability detection and patch generation.

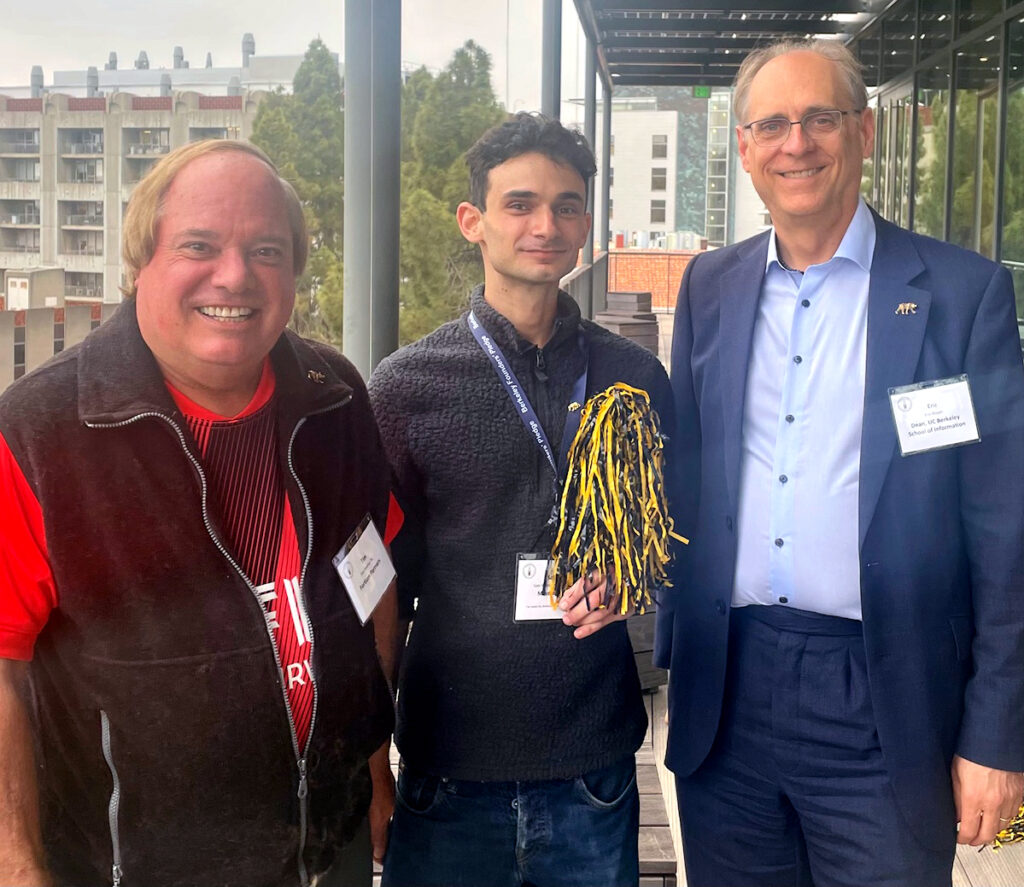

Now, one member of that team has co-founded a company that uses AI agents as “hackers” to detect and patch cybersecurity vulnerabilities. Samuel Berston, a graduate of the School of Information’s Master of Information and Cybersecurity (MICS) program, co-founded MindFort, which claims to have developed “the world’s first fully autonomous security agents” and promises to offer customers “the power of a thousand hackers at your fingertips.”

Earlier this year, MindFort received funding and support from Y Combinator, a leading start-up accelerator. Berston and his two co-founders began the three-month Y Combinator program in April and presented their product at “Demo Day” on Friday, June 13.

Berston says his experience at the School of Information was instrumental in forging his path to becoming an entrepreneur, noting that courses taught by Napoleon Paxton, Kristy Westphal, and Sekhar Sarukkai were particularly influential. “The community and the people of the I School are what got me to dive deep into AI and cybersecurity, and to start doing the sorts of projects that led to MindFort,” Berston said. “The MICS environment is set up for people to bring ideas together, and I think that really breeds entrepreneurship and product ideas. Everyone comes from a different background, and you’re really encouraged to combine ideas. There’s no other way we could have come up with applying AI to be a hacker in a way that is also a software product.”

Berston agreed to sign the Founder’s Pledge, through which UC Berkeley entrepreneurs can make a nonbinding commitment to support UC Berkeley with a personally meaningful gift at some point in the future. “I signed the Founder’s Pledge and sincerely hope to support the CLTC’s [Center for Long-Term Cybersecurity’s] mission of a safer cyberspace, which is highly aligned with MindFort’s mission of democratizing ethical hacking for organizations,” Berston said.

We asked Berston to tell us more about his experience transitioning from the I School to launching MindFort.

(Note: Interview has been edited for length and clarity.)

How did you connect with your co-founders at MindFort?

After the DARPA project, I was really motivated by the idea of using AI agents for cybersecurity. From my previous work at Salesforce and Tableau, I knew firsthand all the enterprise pain points in software security. During the DARPA project, I saw the power of AI to automate a lot of cybersecurity, so I kept doing side projects and posting them on GitHub. The CEO of MindFort, Brandon Veiseh, found one of them. He had been thinking along the same lines: “This makes sense, this should be a no-brainer. Of course AI is going to change cybersecurity, so we just have to put these pieces together.” So he saw my project and wanted to connect. I would never have done it by myself, so it was really nice having someone reach out. Our third co-founder, Akul Gupta, was also thinking about how to use AI for white-hat hacking. It took a lot of putting our heads together before we really forced ourselves to sit down and build something, and by now it has gone through many iterations.

How did you get into Y Combinator?

Getting into Y Combinator was a complete surprise. We applied and initially got rejected; their email said, we’re not sure that what you’re trying to do is possible, it doesn’t seem fleshed out enough yet, but we like the idea overall. So they let us work for another two weeks and present what we had done. And after they saw it, they let us in. That lit the fire for us because we were like, okay, we know we can do this, we just haven’t taken the initiative to fully build all of it out yet. That’s when we built out our full prototype. I’m really proud of what we have. We have gotten our first letters of intent and contracts with real enterprise customers. Now the pace has changed. It’s not just me coding anymore.

How is MindFort different from other products in the market?

We’re putting pieces together in a way that no one else has. In my Y Combinator batch, there are at least five companies doing AI code review, AI QA of applications, and cybersecurity for AI agents and ML models, but it’s really astounding that more companies are not combining those pieces the way we are: using AI not just to QA apps, but to QA their security and basically hack them. We are building a fully autonomous white-hat ethical hacker. We have one big competitor, Expo, a research lab in Europe, that is doing something similar to what we’re doing.

The whole agentic movement was a paradigm shift: plugging your code straight into the AI and letting it take more actions and make more decisions, navigate web pages, and decide what it thinks it should do next. We don’t have to use AI just to make existing processes better. We can program the AI to potentially do everything and make many more of its own decisions. It’s still highly structured and highly contained, and there are strict safety guardrails in place to prevent any destructive actions or unethical behavior. That’s important to us.

Are you focused on apps or networks?

We are focusing on web apps. The problem of automating network penetration testing has been solved to an extent. There are a few companies that do it, and manual pen testing is never going to go away. But that problem is more based on mapping out the network, finding known vulnerabilities, and seeing if there’s an exploit available. No one has really been able to do web app automation because it requires you to, for example, figure out that a website is a banking website, and so maybe you try to transfer funds, or go into the back end and change the amount of money in an account, or tamper with the authorization flow or steal some credentials. It takes a lot more human context.

How do you ensure safety while the AI agents are “hacking” these web apps?

We start all of our testing on a WAST [web application security testing] test website, which we host ourselves. We have several layers of guardrails to prevent any destructive or unethical actions. First of all, the models are already well-trained. They would never launch a DDoS [distributed denial-of-service] attack, drop a database table, or expose customer data. But we add further layers: really strong prompt engineering, plus sanitization and validation of all of the agents’ actions within the environment. Our agent will never go to third-party websites; it stays within the scope of the engagement and only tests what it’s authorized to test. We have trained it to do only proofs of concept. It looks for the most basic level of evidence of a vulnerability, but it won’t proceed to extract or exfiltrate data if it realizes it has access. It will say, I’m 99% sure this is exploitable, but I’m not actually going to take all the data out of the database. I just saw that I could run commands in here, and that means that a hacker could eventually do something bad.
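As a rough illustration of the kind of action validation Berston describes, the Python sketch below checks each proposed agent request against an engagement scope before it runs. It is not MindFort’s implementation: the allowlist `ALLOWED_HOSTS`, the denylist `BLOCKED_SQL`, and the helper names are hypothetical, and a keyword filter on its own is far weaker than layered guardrails.

```python
from __future__ import annotations

from urllib.parse import urlsplit

# Hypothetical engagement scope: the only hosts the agent may touch.
ALLOWED_HOSTS = {"test.example.com"}

# Hypothetical, deliberately small denylist of destructive SQL statements.
BLOCKED_SQL = ("DROP TABLE", "DROP DATABASE", "TRUNCATE", "DELETE FROM")


def is_in_scope(url: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> bool:
    """True only for http(s) URLs whose exact host is on the allowlist."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    return parts.scheme in ("http", "https") and host in allowed_hosts


def is_destructive(payload: str) -> bool:
    """True if the payload contains a blocked SQL statement.

    Whitespace is collapsed first so "DROP   TABLE" is still caught.
    """
    normalized = " ".join(payload.upper().split())
    return any(keyword in normalized for keyword in BLOCKED_SQL)


def validate_action(url: str, payload: str = "") -> bool:
    """Approve a proposed agent request only if it is in scope and non-destructive."""
    return is_in_scope(url) and not is_destructive(payload)
```

Matching the exact host, rather than checking whether the URL merely contains an allowed name, means a lookalike address such as `test.example.com.evil.org` is rejected. A harmless proof-of-concept probe against an in-scope page passes, while a payload that would drop a table is blocked.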

Your product also automates the patching of vulnerabilities. How does that work?

A big part of that was inspired by the DARPA project, which was about full-lifecycle code review and patching. That was the first thing I built in that realm. The process starts with our “red team,” which consists of multiple agents (currently 11) that hack a website live. You can replay the session in the browser to watch them interact with the site. At the end, they come up with all these findings. For example, they might spot a business logic vulnerability on the product purchase page, where you can intercept a request and modify the price.
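The price-tampering flaw described above typically comes from a server trusting a value the client sends. The minimal Python sketch below contrasts a vulnerable checkout with one that looks the price up on the server. The catalog, SKU, and handler names are hypothetical and not drawn from MindFort’s system.

```python
from decimal import Decimal

# Hypothetical server-side catalog: the source of truth for prices.
CATALOG = {"sku-123": Decimal("49.99")}


def checkout_vulnerable(request: dict) -> Decimal:
    # Trusts the client-supplied price, so an intercepted and edited
    # request changes what the customer is charged.
    return Decimal(request["price"]) * int(request["quantity"])


def checkout_fixed(request: dict) -> Decimal:
    # Ignores any client-supplied price and looks it up on the server.
    quantity = int(request["quantity"])
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    return CATALOG[request["sku"]] * quantity


# A tampered request: the attacker rewrote the price to one cent.
tampered = {"sku": "sku-123", "price": "0.01", "quantity": 2}
vulnerable_total = checkout_vulnerable(tampered)
fixed_total = checkout_fixed(tampered)
```

With the tampered request, the vulnerable handler charges 0.02 while the fixed handler still charges 99.98. Treating the server’s own catalog as authoritative, rather than any price in the request, is what closes this class of business logic bug.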

Currently, all you have to do is give us your domain URL. You can optionally give us the code as well. If you do, the agents have a lot more context and can see the exact code that is producing the behavior on the website. If you choose to remediate, the findings go to the remediation agents in our system. Say the red team finds a business logic vulnerability that lets someone apply a coupon to a gift card and give themselves infinite money. The remediation agents take that finding, intelligently search your whole codebase, and find the code that’s creating the issue. Then they hand it off to the patching agent, which generates a patch, runs tests, and validates that the patch isn’t changing the logic or breaking the application. Finally, it gives the patch to the engineer in a ticket.

We wanted to give organizations a way to stop guessing based on patterns and instead have an AI that fully interacts with their website, almost exactly like a human. There are obviously differences: it’s not using a mouse, it’s interacting with a representation of the page. But there’s still proof that the site is actually vulnerable and exploitable.

What is the next stage of the project?

We are almost done with the Y Combinator program, which ends on Demo Day, when all the investors come and watch your pitch. The goal of Y Combinator is to help you raise a seed round so you can keep building your company. Demo Day is the big opportunity to bring all the investors to you. Fundraising is typically very difficult, but luckily, in Y Combinator, everyone comes to you. Because being a cybersecurity company in YC is unusual, we’re pretty close-knit, and we’re doing things so differently that people have been coming to us out of curiosity. The most valuable part has probably been the community. My entire career has been remote, and nothing can replace that human aspect and how motivated and inspired you become when you’re around other people. It’s also been really fun being with my co-founders in person. We’re basically all married and live in the same apartment.

We have just launched publicly, so we are serving customers, which has been exciting and scary, because for the past week or two, we’ve been testing enterprise websites. One of our first customers is a big cloud storage provider, so everything they have is ultra-secure, and they have a really major firewall in place. I didn’t think I would have to spend so much time figuring out how to get around that. But that’s kind of the fun thing about startups. It feels like the problems pick you at this point. You don’t get to choose what you’re going to work on anymore. It’s whatever’s broken, and we have a million things we want to do to make the system better, and we can only do one at a time.